AI Overviews, the next evolution of Search Generative Experience, will roll out in the U.S. starting May 14 and in more countries soon, Google announced during the Google I/O developer conference at the Shoreline Amphitheater in Mountain View, CA. Google also showed several other changes coming to Google Cloud, Gemini, Workspace and more, including AI actions and summarization that can work across apps, which could open up interesting options for small businesses.

Google’s Search will include AI Overviews

AI Overviews is the expansion of Google’s Search Generative Experience (SGE), the AI-generated answers that appear at the top of Google searches. You may have seen SGE in action already, as select U.S. users have been able to try it since last October. In addition to answering queries, SGE can generate images or text. AI Overviews brings AI-generated information to the top of Google Search results.

With AI Overviews, “Google does the work for you. Instead of piecing together all the information yourself, you can ask your questions” and “get an answer instantly,” said Liz Reid, Google’s vice president of Search, during I/O.

By the end of the year, AI Overviews will be available to over a billion people, Reid said. Google wants AI Overviews to answer “ten questions in one” by using multi-step reasoning to link tasks together and draw accurate connections between pieces of information. For example, someone could ask not only for the best yoga studios in the area, but also for the distance between the studios and their home and the studios’ introductory offers. All of this information will be listed in convenient columns at the top of the Search results.

Soon, AI Overviews will be able to answer questions about videos provided to it, too.

Availability of AI Overviews

AI Overviews is rolling out starting May 14 in the U.S., with availability for everyone in the country expected within the week. Availability in other countries is “coming soon.” Previously, AI Overviews had been available in Search Labs.

Is AI Overviews useful?

Does AI Overviews actually make Google Search more useful? AI Overviews may dilute Search’s usefulness if the AI answers prove incorrect, irrelevant or misleading. Google said it will clearly label which images are AI-generated and which come from the web.

Gemini 1.5 Pro gets upgrades, including a 2 million token context window for select users

Google is expanding Gemini 1.5 Pro’s context window to 2 million tokens for select Google Cloud customers. To get the wider context window, join the waitlist in Google AI Studio or Vertex AI.

The ultimate goal for the teams working on expanding Google Gemini’s context window is “infinite context,” Google CEO Sundar Pichai said.

Google’s large language model Gemini 1.5 Pro is getting quality improvements in the API, and Google introduced a new model, Gemini 1.5 Flash. New features for developers in the Gemini API include video frame extraction, parallel function calling and context caching. Native video frame extraction and parallel function calling are available now; context caching is expected to arrive in June.

Available today globally, Gemini 1.5 Flash is a smaller model focused on responding quickly. Users of both Gemini 1.5 Pro and Gemini 1.5 Flash will be able to input information for the AI to analyze in a 1 million token context window.

Gemma 2 comes in a 27B parameter size

Google’s small language model, Gemma, will get a major overhaul in June. Gemma 2 will have a 27B parameter model in response to developers requesting a bigger Gemma model that is still small enough to fit inside compact projects. Gemma 2 can run efficiently on a single TPU host in Vertex AI, Google said.

Plus, Google rolled out PaliGemma, a language and vision model for tasks like image captioning and asking questions based on images. PaliGemma is available now in Vertex AI.

Gemini summarization and other features are coming to Google Workspace

Google Workspace is getting several AI enhancements, which are enabled by Gemini 1.5’s long context window and multimodality. For example, users can ask Gemini to summarize long email threads or Google Meet calls.

Gemini will be available in the Workspace side panel on desktop next month for businesses and consumers who use the Gemini for Workspace add-ons or the Google One AI Premium plan. The Gemini side panel is now available in Workspace Labs and for Gemini for Workspace Alpha users.

Workspace and AI Advanced customers will be able to use several new Gemini features, starting with Labs users this month and becoming generally available in July:

- Summarize email threads.

- Run a Q&A on your email inbox.

- Get longer suggested replies in Smart Reply that draw on contextual information from the email thread.

Gemini 1.5 can make connections between apps in Workspace, such as Gmail and Docs. Google Vice President and General Manager for Workspace Aparna Pappu demonstrated this by showing how small business owners could use Gemini 1.5 to organize and track their travel receipts in a spreadsheet based on an email. This feature, Data Q&A, is rolling out to Labs users in July.

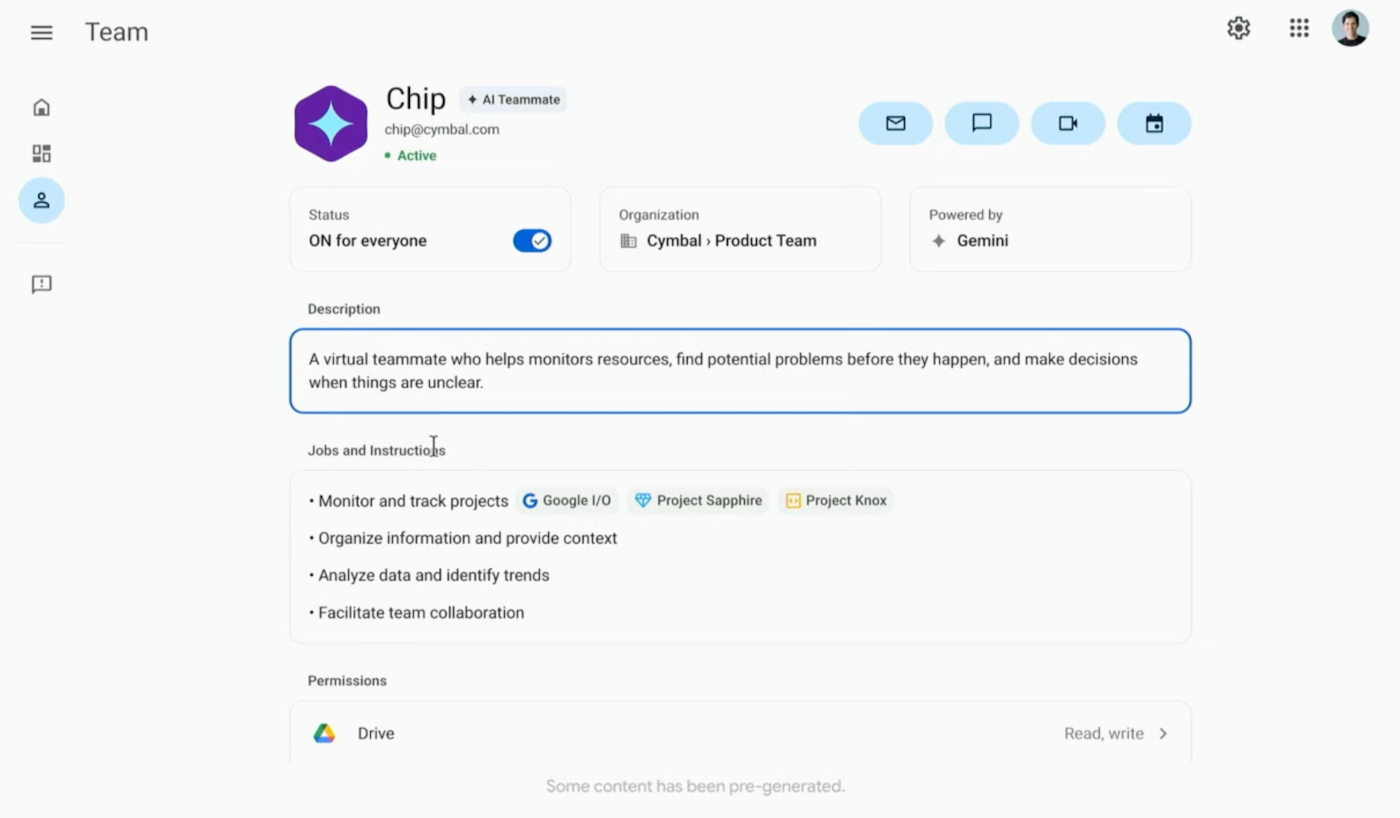

Next, Google is adding a Virtual Teammate to Workspace. The Virtual Teammate will act like an AI coworker with an identity, a Workspace account and an objective (but without the need for PTO). Employees can ask the assistant questions about work, and the assistant will hold the “collective memory” of the team it works with.

Google hasn’t announced a release date for Virtual Teammate yet. The company plans to add third-party capabilities to it in the future. Speculatively, Virtual Teammate could be especially useful for businesses if it connects to CRM applications.

Voice and video capabilities are coming to the Gemini app

Voice and video capabilities are coming to the Gemini app later this year. Gemini will be able to “see” through your camera and respond in real time.

Users will be able to create “Gems,” customized agents to do things like act as personal writing coaches. The idea is to make Gemini “a true assistant,” which can, for example, plan a trip. Gems are coming to Gemini Advanced this summer.

The addition of multimodality to Gemini comes at an interesting time: OpenAI demonstrated ChatGPT with GPT-4o earlier this week. Both Gemini and ChatGPT held very natural-sounding conversations. ChatGPT responded to interruptions, but misread or misinterpreted some situations.

SEE: OpenAI showed off how the newest iteration of the GPT-4 model can respond to live video.

Imagen 3 improves at generating text

Google announced Imagen 3, the next evolution of its image generation AI. Imagen 3 is intended to be better at rendering text, which has been a major weakness for AI image generators in the past. Select creators can try Imagen 3 in ImageFX at Google Labs today, and Imagen 3 is coming soon for developers in Vertex AI.

Google and DeepMind reveal other creative AI tools

Another creative AI product Google announced was Veo, its next-generation generative video model from DeepMind. Veo created an impressive video of a car driving through a tunnel and onto a city street. Veo is available for select creators starting May 14 in VideoFX, an experimental tool found at labs.google. Google plans to add Veo to YouTube Shorts and other products at an unspecified date.

Other creative types might want to use the Music AI Sandbox, which is a set of generative AI tools for making music. Neither public nor private release dates for Music AI Sandbox have been announced.

Sixth-generation Trillium TPUs boost the power of Google Cloud data centers

Pichai introduced Trillium, Google’s sixth-generation Google Cloud TPUs; Google claimed the TPUs show a 4.7X improvement over the previous generation. Trillium TPUs are intended to add greater performance to Google Cloud data centers and compete with NVIDIA’s AI accelerators.

Time on Trillium will be available to Google Cloud customers in late 2024. Plus, NVIDIA’s Blackwell GPUs will be available in Google Cloud starting in 2025.

TechRepublic covered Google I/O remotely.