Financial services institutions have been quick to recognise the opportunities generative AI offers for cybersecurity and operational resilience, and in 2024 they are emerging as leading examples of how to put its potential into practice, according to new research from Splunk.

Splunk’s 2024 State of Security report, based on a survey of 1,650 security executives worldwide, found that financial services respondents are more optimistic about keeping up with cybersecurity demands thanks to AI: 50% said it was easier to keep up this year, compared with 41% across all industries.

The positive outlook comes despite financial services being a ‘high stakes’ industry, where complex cybersecurity and compliance risks, including the possibility of AI-powered attacks by threat actors, require continual adaptation, said Robert Pizzari, Splunk’s APAC-based Group Vice President Strategic Advisor.

“Asia-Pacific financial services institutions have been early adopters of machine learning over the years,” Pizzari said. “Now we are seeing them become early adopters in the generative AI space as well, which will help them be more productive and respond quicker to potential threats.”

Asia-Pacific financial institutions are embracing generative AI for cybersecurity

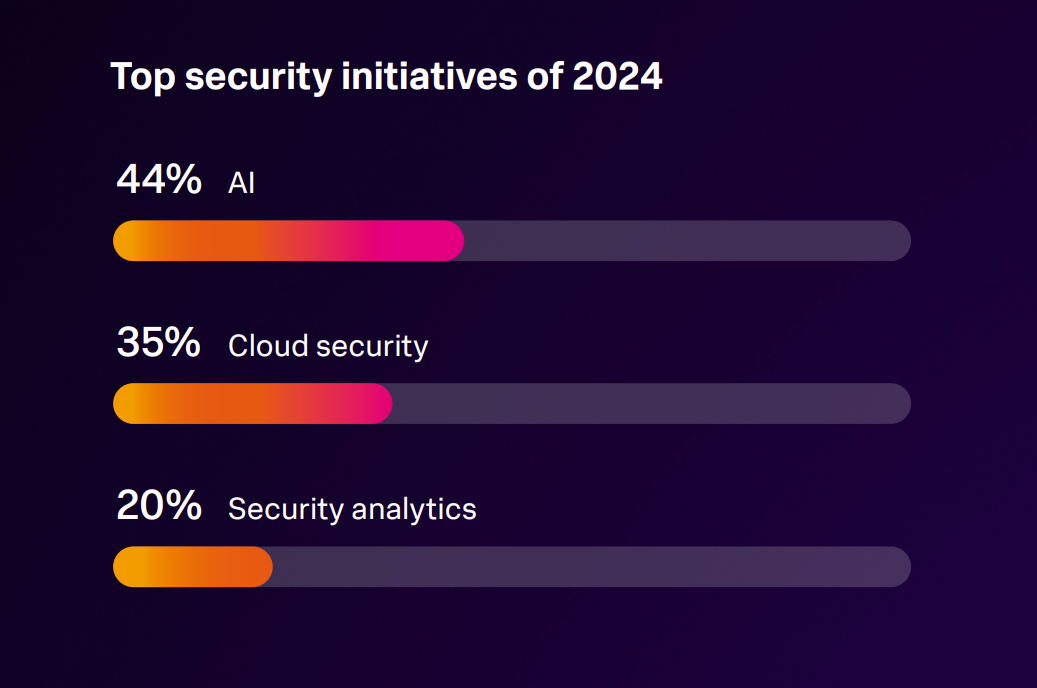

Splunk’s 2024 State of Security report found the use of AI was taking off among security respondents across all industries. AI was named among the top three security initiatives in 2024 by 44% of global respondents, ahead of initiatives in cloud security (35%) and security analytics (20%) (Figure A).

In the Asia-Pacific, research firm IDC has predicted that by the end of 2024, 25% of Asia’s Top 2000 companies will use generative AI within their security operations centres (SOCs) to bolster analysts’ skills in detecting and responding to threats and to improve incident management efficiency.

The financial industry is at the forefront of this momentum. One example is Project MindForge, a collaboration between the Monetary Authority of Singapore (MAS) and key financial industry participants and technology companies, which explores the broader opportunities and risks of AI for banks in the region.

DBS Bank, which participated in MindForge and has spent five years scaling AI across multiple use cases, achieved tangible outcomes worth US$276 million from its use of AI in 2023. The bank has deployed AI in cybersecurity to detect phishing and fake DBS websites, as well as to detect threat actor behaviour in its environments.

Figure A

Financial services institutions plan to use AI to win the cybersecurity war

Financial services institutions are among the organisations that believe AI will help them gain an advantage over cyber adversaries. A CISO report released by Splunk in 2023 found that only 17% of respondents thought AI would give defenders the advantage, but this had risen to 43% by early 2024.

Australia’s Commonwealth Bank is one Asia-Pacific institution that believes AI gives it an edge. The bank is using AI to sort through the 240 billion signals its cybersecurity practice receives every week, handling commodity threats in particular, so that analysts can focus on more sophisticated threats.

Financial services institutions (FSIs) are particularly confident that AI will help close the cybersecurity talent gap. Seventy-one per cent of FSI respondents said AI would make seasoned security professionals more productive, versus 65% across all industries, while 63% said it would help them source and onboard talent faster, compared with 58% overall.

“It is a testament to the maturity of financial services institutions that they are using AI creatively, beyond cyber security threat responses, to find and onboard staff faster. This will enable them to upskill their people so they are more capable in addressing the challenges they face,” Pizzari said.

FSIs have put in place strong foundations for cybersecurity best practices

Financial services institutions have more of the foundational building blocks in place for advanced cyber programs. Splunk identified these building blocks as resourcing and empowering teams appropriately, collaborating across teams, innovating with generative AI, and detecting and responding to threats faster.

For instance, 64% of security teams at financial institutions say they are apt to work more closely with engineering operations on digital resilience initiatives, compared with just 46% across all industries. Splunk said this collaboration between IT and engineering is driving more optimism.

“FSIs have recognised cyber resilience alone does not equal business resilience. To be operationally resilient, you can’t operate in silos; the need to be digitally resilient across all parts of your IT business is key, and FSI security professionals know the only way to do that is to effectively collaborate,” Pizzari said.

Financial services institutions take strategic approach to generative AI

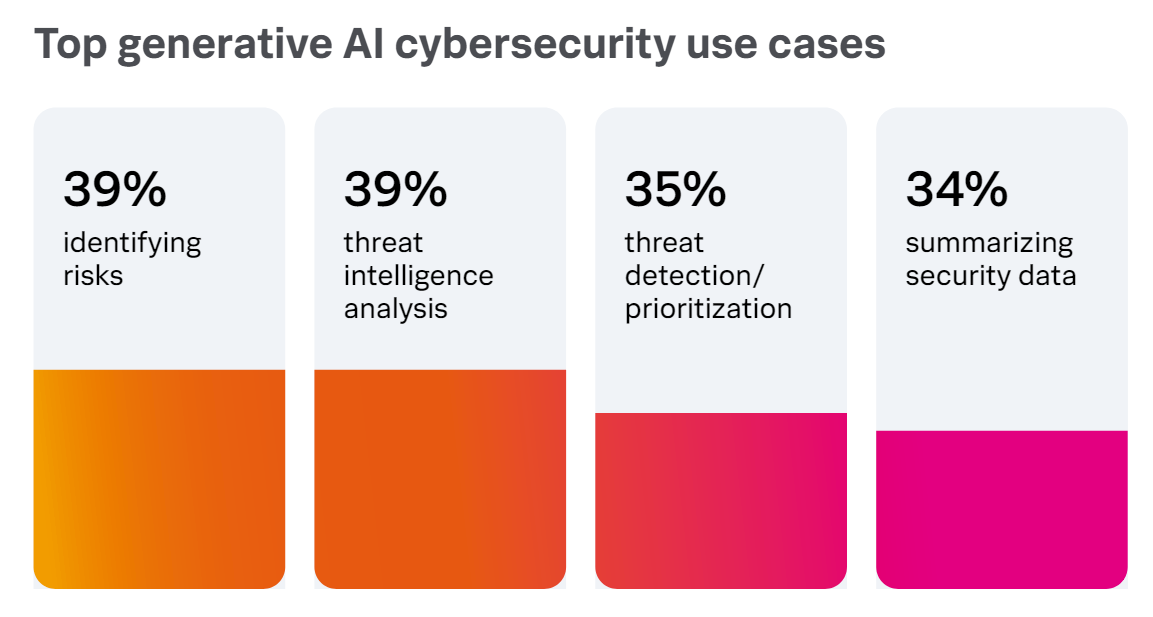

Organisations with advanced security programs use AI for cybersecurity more frequently. Splunk’s research found the top AI use cases were identifying risks, analysing threat intelligence, detecting and prioritising threats, and summarising security data (Figure B).

Organisations with more mature programs are also more cognisant of the potential risks of generative AI. In the financial services sector, 76% of respondents said they lacked the education to fully understand the implications of generative AI, compared with 65% across all industries surveyed.

Singapore’s MindForge project is a case in point. It explored the risks of AI adoption in the region’s financial services sector, including risks to cyber and data security, fairness and bias, and transparency and explainability, so that institutions are better able to mitigate risks and capitalise on opportunities.

“If you look at banking institutions located in Australia, they are early adopters of generative AI,” said Pizzari. “That being said, they admit they are still in an exploration phase, and therefore are more cautious in how they’re adopting the technology across the business as well.”

Figure B

Cyber threats, regulation among drivers for financial services adoption of AI

Financial services institutions in the Asia-Pacific have traditionally been prime targets for cyber threat actors because of the value of the assets and the volume of sensitive information they handle and protect. Pizzari said this makes “robust cybersecurity absolutely critical just to survive in this space”.

The threat landscape now includes AI-generated deepfakes. According to the Financial Services Information Sharing and Analysis Center (FS-ISAC), APAC financial institutions reported deepfakes being used to impersonate senior executives in 2023, while Southeast Asian banks have seen stolen facial recognition data used to commit account fraud.

“The complexity and sophistication of cyber threats targeting the financial sector is more pronounced, and so AI and machine learning can help detect and respond to these in a better fashion,” he said.

Splunk’s 2024 State of Security report found cyber extortion events were more common at financial institutions (54% versus 48% across all industries), and 39% of security professionals in financial services already rated AI-powered attacks as a top concern, four percentage points higher than in other industries.

There are fears AI could supercharge some of the common attacks faced by the financial services industry, which is already a top target for fraud. Splunk warns that the top threats for FSIs continue to include account takeovers, business email compromise, insider threats and social engineering attacks.

Big Four accounting firm EY has suggested that financial services CISOs in Asia will respond to AI-powered cybercrime with their own AI investigation copilots, which could improve the consistency of decisions across volumes of data that were “previously unmanageable for humans alone”.

Financial services industry and security professionals under regulatory pressure

Regulation is also driving AI adoption in financial services. New rules in the U.S. and the EU, as well as closer to home in jurisdictions such as Australia, are focusing regional security professionals more on compliance obligations, including material breach reporting (Figure C) and personal liability.

“I don’t know of an industry facing the same regulatory pressure as financial services. FSI institutions face the most stringent requirements, and maintaining security compliance often necessitates the use of advanced security measures, including AI, just to give them an edge to be able to keep up,” Pizzari said.

Figure C

Splunk’s report found many security professionals globally believe compliance will change their work, with 87% agreeing they will handle compliance very differently by 2025. Meanwhile, 76% of respondents thought the risk of personal liability was making cybersecurity a less attractive profession.

Financial services providing AI cyber security leadership

The APAC financial services industry often has more resources at its disposal than other industries, and competition within the sector can propel it towards innovation. “There is a lot of healthy competition in financial services, and that is accompanied by a strong drive for innovation and excellence in customer experience within the sector,” Pizzari explained.

This makes financial services a sector to watch for cybersecurity best practice. “Institutions that leverage AI for cybersecurity while taking into account considerations such as the ethical use of AI, can gain a competitive advantage by offering more secure services to customers, which is what they’re after.”

With insights contributed by Harry Chichadjian, FSI & Industry Advisory Lead, APAC, and Nathan Smith, Head of Security, APAC.

Access Splunk’s 2024 State of Security report.